*warning! LOTs of math.

Introduction

This primer aims to explain a few important ideas in the field of Verifiable Computing and in particular, some key tools relevant to building cryptographic proof systems (CPSs). A key advantage of these CPSs is that they allow devices or entities without a lot of resources to verify or “check” that some larger entity with more resources is behaving correctly.

We start by introducing a few mathematical tools and then expand on some core cryptographic primitives. We hope that this will serve as useful introduction to those looking to understand the recent hype around proof systems and in particular, the use Zero-Knowledge (ZK) proof systems for enhancing privacy and scalability on blockchains.

The Problem

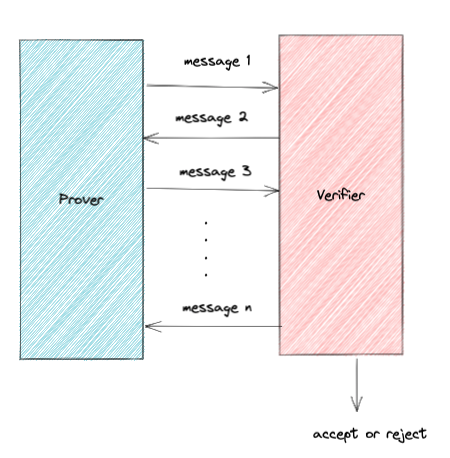

Let’s setup the problem. We have some entity (call him the Prover) and some other entity (call him the Verifier). The Prover wants to prove to the Verifier that he knows the result of some computation and the Verifier needs to efficiently check the Prover. We’ll define what “efficient” means more concretely later but let’s focus on this notion of interaction between the Prover and the Verifier. This construction can be extremely powerful! You could have an iPhone in Chicago verifying the execution of some supercomputer at Los Alamos.

The Prover sends a message and the Verifier responds with an answer that depends on the Prover’s message. The Prover responds with another message that depends on his last message and the Verifiers’ message, and so on until the Verifier accepts or rejects.

Some Mathematical Tools

These are some important mathematical tools that are relevant to our discussions on proof systems.

- Real / Complex Numbers: R,CR,C

- Finite Field of order pp FpFpwhere pp is prime.

- Group GG

- we use (*) to represent the group operator (which can be standard addition, multiplication, or any other operation that satisfies the three conditions of associativity, identity element, and inverse discussed below)

Groups

Groups play an important role in cryptography by enabling information to be hidden from eavesdroppers.

Definition: A group is set with a binary operation *, an identity element, and an inverse map (i.e. for all members of the group, there exists another element of the group such that ei ∗ej=1ei ∗ej=1. The operation also has to satisfy associativity: (x∗y)∗z=x∗(y∗z)(x∗y)∗z=x∗(y∗z)

Types of Groups

- Abelian groups are groups that ensure that if we two group elements ii and jj, i∗j=j∗ii∗j=j∗i

- Cyclic groups are a special kind of abelian group where the elements of the group can be generated by a single group element (let’s call this the generator gg of the group). This means that if we start off with a single group element gg and apply gg to this repeatedly via the group operator (g∗g,(g∗g)∗g),...)g∗g,(g∗g)∗g),...) then we can obtain every single element in the group.

In cryptography, groups are really just objects to run computations over. Groups are particularly useful because: (1) they can help predict memory usage; (2) allow you to draw random numbers (you would find it very difficult to sample a random element from something like the real numbers – the probability of getting any real number is 0 because it's of infinite size).

The Power of Groups: Diffie-Hellman Exchange

Groups are powerful mathematical objects - let’s take a look at an important use case of groups in cryptography. The Diffie-Hellman Exchange allows two parties, say Alice and Bob, to exchange some secret ss with each other without an adversary eavesdropping to get access to this secret.

- Alice and Bob publicly agree on a generator gg and the Group GG

- Alice picks a private number aa at random and computes gaga and sends to Bob

- Bob picks a private number bb at random and computes gbgb and sends to Alice

- Alice computes (ga)b(ga)b

- Bob computes (gb)a(gb)a

Notice that by the rules of exponentiation, (ga)b=(gb)a=s(ga)b=(gb)a=s— Alice and Bob have both been able to compute a shared secret! Now suppose some adversary AA attempts to also compute the secret ss without having access to aa or bb - can they do it? Let’s answer this by taking a simple example of a Group: ZpZp (the integers modulus pp, where pp is some very large prime number). For AA to compute gabgab they would have to find some x=abx=ab such that gxmodp=sgxmodp=s. This is known as the discrete-logarithm problem. For large prime numbers ppit is computationally infeasible to find such a solution xx. This is well trusted to be a hard problem — factoring primes has been known to be hard since the Greek polymath Eratosthenes (see Sieve of Eratosthenes). In fact, no one has broken this system in 2000 years!) The hardness of discrete-logarithms serves as a core foundation for modern cryptography, including the development of public-private crypto-systems like RSA.

This example leads to another commonly used idea in crypto systems: The Computational Diffie-Hellman Assumption: Givengaga and gbgb it is computationally infeasible for an adversary to compute gabgab. We won't give the precise definition of “computational feasibility” here, but the intuition of this idea is helpful to understand—in essence, it is nearly impossible for someone to know the secret gabgab unless they have knowledge of both aa and bb. See the full definition here.

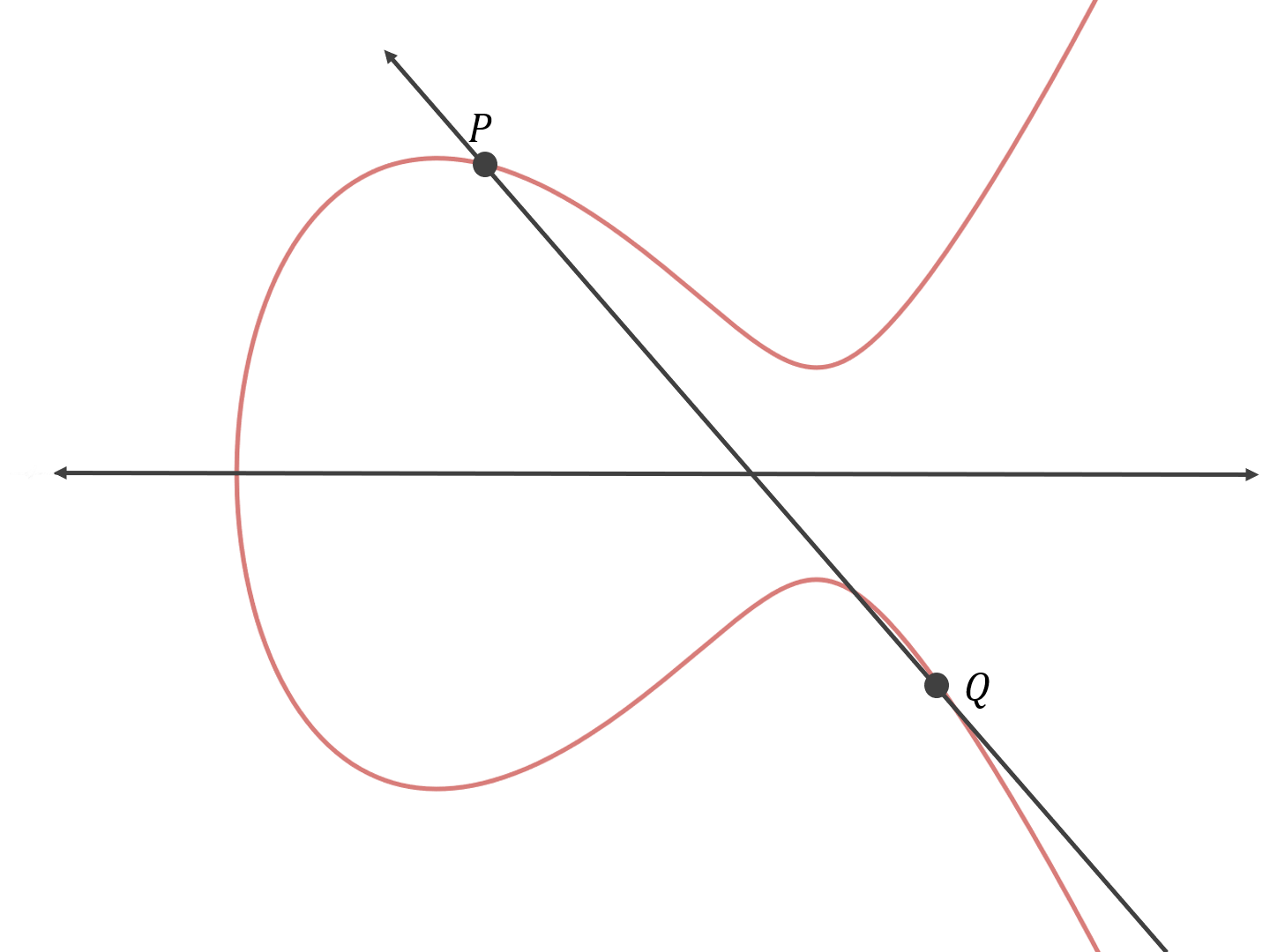

Elliptic curves (ECs) a core part of modern cryptography and while they are used extensively in blockchains (starting with Bitcoin), they are not a new concept. ECs are really just a special type of group that satisfy the Diffie-Hellman Assumption.

The theory behind elliptic curves is fairly mathematically intense, and we point you to the primer referenced below as a relatively intelligible guide to EC cryptography. That being said, we can summarize the intuition behind ECs in words. An elliptic curve is a set of points that constitutes a group under the following operation: given 2 distinct points PP and QQ on the elliptic curve, as shown above, a line connecting those 2 points is guaranteed to intersect the elliptic curve at exactly one more point RR ; we reflect RR across the axis that splits the curve in half to obtain a point SS also on the curve, and we conclude by defining P∗Q=SP∗Q=S.

An EC cryptosystem is defined by picking a finite field of size mm where mm is prime, a curve equation (i.e.y2=x3+ax+by2=x3+ax+b) and a public point PP on the curve. A private key is a number t<mt<m, and a public key is pp applied to itself tt times via the * operator. Call this operation of applying pp to itself tt times via gp(t)gp(t). It is difficult to compute one’s private key tt given knowledge of only their public key, and since we have:

gp(t1,t2)=ggp(t1)(t2)=ggp(t2)(t1)gp(t1,t2)=ggp(t1)(t2)=ggp(t2)(t1)

it is possible for two parties Alice and Bob with private keys t1t1 and t2t2, respectively, to share a secret that no one else can compute without having knowledge of t1t1 and t2t2. This satisfies the conditions of the Diffie-Hellman exchange perfectly! Here’s a helpful intuitive primer on elliptic curves as well.

So what did this accomplish? We can use relatively small keys to achieve similar security guarantees as other crypto-systems like RSA.

Prime Fields

You may have heard of fields. This can be the real numbers or the rational numbers or the complex numbers. A finite field is a set that just contains a finite number of elements for which we define operations like addition, subtraction, division, and multiplication. But how do we actually achieve this? If you add or multiply an element in a field some number of times, you should get back a number in the field. Let’s take a concrete example. Say 11 is an element of this field FF . We can add 11 to itself nn number of times (nn is prime). How do we ensure that the result of this computation returns an element that is still a member of this field? One way is simply adding a remainder function to the end of the computation.

(1+1+...+1)modn(1+1+...+1)modn

More generally, if an operation (addition / multiplication) is applied to some element ee some mm number of times, where m>nm>n, you will always still get an element e′e′ that is a member of this field! The more mathematically experienced reader may note that any field is in essence a group with an additional operation satisfying certain criteria, but we will not delve into those details in this primer.

Polynomials

As we’ll see later, polynomials play a crucial role in cryptography and the security guarantees offered by many cryptographic proof systems.

Definition. A univariate polynomial pp of total degree dd over some finite field FF is defined as follows:

p(x)=∑i=1daixi where ∀i,deg(xi)≤dp(x)=i=1∑daixi where ∀i,deg(xi)≤d

One intuitive reason that polynomials are useful in cryptography is that they are able to represent a large amount of information very succinctly. Think about the following concrete example. Imagine creating a table of numbers. In the first column you start writing down the natural numbers in order (i.e. the integers starting from 11. Call this the domain. Now, in the second column start writing down random numbers from the real number line. If we interpolate across these points, we can create a polynomial that represents all of this information at once! Practically, we treat can a polynomial as a continuous representations of a large truth table over some domain.

Schwarz-Zippel Lemma

Here’s where polynomials can come in handy. The Schwarz-Zippel Lemma is a core tool for ensuring the soundness of many CPSs.

Definition. Given two distinct univariate polynomials p,qp,q with deg≤ddeg≤d over some finite field FF,

Pr[p(x)=q(x)]≤d∣F∣Pr[p(x)=q(x)]≤∣F∣d

Intuitively this means that if two low-degree polynomials are not identical, they disagree at almost all points in the field. Typically, a CPS will convert some computation (written is some higher-level abstraction - think Solidity code) to polynomials. We can then leverage this lemma to ensure the soundness of the protocol.

Some Cryptography Tools

There are two main properties we want CPSs to have: (1) Completeness; (2) Soundness. With a deterministic verifier, completeness and soundness are defined as follows:

- Completeness - given a truthful claim, the Verifier should always accept.

- Soundness - the Verifier should not ever accept a false claim.

Now, let’s let the Verifier flip some random coins (i.e. make the Verifier non-deterministic)

- Completeness - the probability that a Verifier will be convinced by a true claim (typically we want this to be 11 ).

- Soundness - For all Provers (malicious as well), the probability that a verifier accepts a false claim should be low.

Notice that the difference here is very subtle but crucial to cryptographic proof systems - randomness allows us to construct proof systems that can run efficiently (typically this means that the Verifier can run in polynomial-time).

The Sumcheck Protocol

The sumcheck protocol is one of the fundamental building blocks for interactive proof systems. In essence, this protocol is defined by the following context: a prover wants to prove to a verifier its knowledge of the sum

H=∑x1,...,xn∈{0,1}g(x1,...,xn)H=x1,...,xn∈{0,1}∑g(x1,...,xn)

where gg is a polynomial in nn variables. We assume the Verifier has oracle access (i.e. they can cheaply evaluate) g(r1,...,rn)g(r1,...,rn). The Prover and Verifier want to engage in this process relatively efficiently, and Sumcheck allows them to do so iteratively. What follows is an intuitive description of the Sumcheck protocol.

Instead of evaluating the polynomial at all possible evaluations (exponential time problem), we want the Verifier to leverage interactions and randomness. Effectively, we “strip” off each of the operators at every round of the protocol by binding each variable to some random element from the field. First, the prover supplies a polynomial g1(x1g1(x1that is supposed to represent this:

H=∑x2,...,xn∈{0,1}g(X1,x2,...,xn)H=x2,...,xn∈{0,1}∑g(X1,x2,...,xn)

or the sum over all variables except the first, which is free. One can see that H is the sum of g1g1over all possible values of X1X1 and indeed, the Verifier checks that this is the case—that summing g1(0)g1(0)and g1(1)g1(1)does indeed evaluate to the sum HH. If this is not true, then clearly the Prover does not know the sum, and the Verifier can state this to be the case. If this is true, however, the verifier cannot be certain that the Prover knows the sum—the Prover may have just gotten lucky in picking a g1g1 whose sum over all values of X1X1 happens to evaluate to HH— but the probability that the Prover indeed knows H has increased. The Verifier then randomly samples a value r1r1for X1X1 and sends that to the prover, who is now tasked with sending back a polynomial g2(x2g2(x2 that represents the sum over all variables except the first, which is set to r1r1 now, and the second, which is now fixed. One can see the iterative pattern here: the Verifier will check that gi−1(Xi−1)gi−1(Xi−1)provided correctly from the last iteration is equal to the sum of gi(Xi)gi(Xi) provided in this iteration over all possible values of XiXi. If at any point the Prover fails to provide a correct polynomial, it will be rejected by the Verifier; every time it succeeds in providing a correct next iteration, the probability that it actually knows H and isn’t just getting lucky increases. By the end, if the Prover provides the last iteration (a polynomial gn(Xn)gn(Xn)) correctly, the verifier will conclude that the prover knows HH with very high probability.

Linked here is an in-depth description of Sumcheck and some of the details about the iterative process and the probabilistic guarantees. We won’t walk through any examples here - the work can get a bit tedious but Thaler provides a nice more in-depth walk through of the application of Sumcheck to some interesting canonical problems here.

So why is this useful?

- Now that we understand

Sumcheck, let’s treat it as a blackbox algorithm we can leverage to efficiently prove that some computation is actually valid. - The high-level approach to using

Sumcheckis to take some computation that you wish to design a protocol for and rewrite in terms of a sum over a boolean hypercube. By reducing our problem to an instance ofSumcheck, we can just blindly apply it!

The GKR Protocol

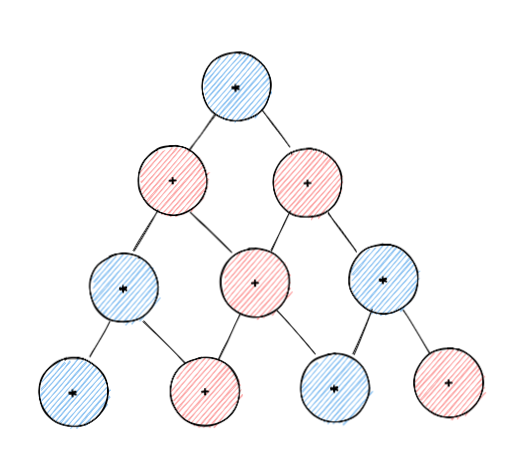

Let’s take a look at a concrete use case for Sumcheck. The GKR protocol is a fascinating and clever protocol for efficiently verifying computation that can be expressed as a low-depth arithmetic circuit. This was one of the first attempts at coming up with a system that could verify a very general class of computations (not just sums over the boolean hypercube). Incidentally, it uses Sumcheck as an algorithmic backbone!

Here’s the basic construction - we have some computation that can be expressed in different layers. Naively, we could have the Verifier just re-run the computations of the circuit and check whether his output matched the claimed output. But this is seriously inefficient. Instead, let’s try and use the structure of the circuit to reduce the time complexity of the Verifier.

Here’s the basic intuition: we reduce a claim about layer i of a circuit to a claim about layer i+1. We follow this process inductively to reduce the claim all the way down to just evaluating a polynomial at a set of random points instead of evaluating the entire circuit.

We won’t go through the full description here because it is tedious but here’s a full description of the GKR protocol for those who are inclined.

WTF is an Arithmetic Circuit

We’ve discussed a few mathematical objects like polynomials that are useful in building crypto proof systems but we haven't talked about how we might actually compute them. Arithmetic circuits are an easy and clear way to represent the computation involved in evaluating a polynomial. An arithmetic circuit is similar to logical circuits used in computers and electronics. In electronic chips, we have a bunch of connected gates (AND, OR, NOT, XOR, etc..). We give these gates some input and feed the output as input to the next gate. Instead, we replace those logical operators with arithmetic operations like addition and multiplication. We get something like this:

Polynomial Commitment Schemes (PCS)

PCS are extremely important to the function of CPSs. At a very basic level, it ensures that the Prover can’t cheat while interacting with the Verifier; i.e. the Prover shouldn’t be able to change his previous messages based on the random points the Verifier is drawing. (we call this property non-adaptivity). Think of the Sumcheck protocol above - we want the Prover to commit to the polynomial before the Verifier sends the random point (commitment schemes are a robust way to ensure that the Prover is behaving honestly). We’ll see that polynomial commitment schemes play a key role in ensuring the security of cryptographic proof system in a later piece.

Here’s how this would work:

Provercommits to some reference string- How does the

Verifierknow that the Prover actually committed to this polynomial? Verifierasks theProverto evaluate the polynomial at some random pointProversends back the evaluation of the polynomial at some point on the Elliptic Curve

Conclusion

In this article, we described some of the core mathematical and cryptographic tools you will need to know to understand and evaluate cryptographic proof systems. We hope you found this piece both exciting and helpful in demystifying some of the often arcane “moon math” that is involved in building your favorite private L1, scalable L2, or ZK-powered application. With this background, hopefully you are better prepared to dig into some of the inner workings of other proof systems.

Please reach out to (Rahul Maganti (@rahulmaganti_) or Anirudh Suresh (@anihamde) if you have any questions or comments, and tell us what we got wrong! Thanks to Conor Patrick (@_conorpp) and Emanuele Cesena for their feedback!

References

- https://en.wikipedia.org/wiki/Computational_Diffie–Hellman_assumption

- https://en.wikipedia.org/wiki/RSA_(cryptosystem)

- http://staff.ustc.edu.cn/~mfy/moderncrypto/reading%20materials/Introduction_to_Modern_Cryptography.pdf

- https://o1-labs.github.io/mina-book/crypto/overview.html

- https://people.cs.georgetown.edu/jthaler/ProofsArgsAndZK.pdf

- https://people.cs.georgetown.edu/jthaler/GKRNote.pdf