In an upcoming series, we’re going to dive into the guarantees that different chains make about finality and the block production/consensus mechanisms that produce those guarantees. Before we get too into the weeds, though, it’s worth taking a critical look at what we mean by finality in the first place, and why it’s an especially relevant concept for cross-chain protocols.

The importance of finality arises from a fundamental problem in the design of decentralized, distributed systems—individual entities can have different views of the system’s state, and there is no authority providing a source of ground truth. As a result, there’s no quick and easy way to be certain that your particular view is shared by other participants. For many blockchain protocols, it’s possible that as you track the state of the chain, new information you receive from the network causes you to revise your previous view, resulting in a block reversion. At the protocol level, different chains take different approaches to provide the assurance that a block won’t be reverted after some point in time or, equivalently, that honest participants in the network will eventually converge on the same view of the chain, which is the rough idea that the term “finality” captures, at least when speaking generally.

When we talk about finality in the abstract, we’re usually not referring to a hard technical specification but a more general property of eventual consistency that makes consensus possible, where the implementation details are unspecified. The term only acquires concrete meaning when we examine the workings of a specific protocol and the kinds of guarantees it can produce. Despite this, it’s often used in the same way to talk about protocols with very different guarantees.

When speaking casually, it may make sense to gloss over the details like this. To an ordinary user making a transaction, the specifics of their chain’s underlying protocol and how it provides security don’t necessarily matter. As long as there exists some kind of mechanism that assures them that once their transaction goes through, it won’t be reverted, the details of how that’s accomplished don’t have to cross their mind. However, cross-chain bridging is a context where the subtleties of finality naturally come to the forefront of considerations in the design of a safe, robust, and user-friendly protocol. In order to tease out these subtleties, we’ll first examine Bitcoin to see one particular instance of what the term “finality” can imply, back out to a broader discussion, and reason about the fundamental limits to what finality can guarantee as an in-protocol property beholden to social consensus. After outlining some of the variable factors that accompany a general understanding of the term, we’ll explore how these factors precipitate an array of considerations for bridge design, some of them security-critical and some more relevant to practical UX. We’ll start off where things began: the Bitcoin protocol and Proof of Work.

Back to Basics

If you don’t need a refresher on PoW, feel free to skip to the next section.

When we talk about Nakamoto protocols (i.e., a longest-chain fork choice rule plus Proof of Work), finality is usually understood as some fixed number of blocks built on top of the one containing your transaction in the longest chain, after which you and the recipient can be confident that it won’t be reverted. Exchanges, for example, will typically accept Bitcoin deposits after around 4 to 6 block confirmations. To understand what motivates these kinds of requirements, let’s consider an extreme example: What if we accepted a transaction after just a single block confirmation, i.e., as soon as a block containing it has been mined? We know this is dangerous, of course, since the head of the chain can be orphaned/ommered (we’ll see how below). For the sake of building some intuition, though, we’ll walk through exactly how things can go wrong by doing this and how this informs the notion of finality for PoW protocols.

Let’s lay some groundwork first. When considering block safety, in the context of any protocol, it’s useful to take the perspective of a single client observing the network and try to reason about how your local view can change over time. What kind of information could you receive from other network participants that would cause you to revert the blocks you see? Relatedly, under what conditions are you ready to believe that your view of the chain at a given height will be the same as everyone else’s? The assurance that every node following the protocol will eventually agree in their local views (at least up to a certain point) is what makes the abstraction of a consistent “final” state, the state of the singular, canonical blockchain, meaningful.

Going back to Proof of Work, let’s see what can happen to our view as we track the head of the longest chain—remember that under our toy heuristic, we’ll choose to confirm any transaction if we see it at the head. Suppose we’re interested in a transaction, and we receive a mined block, call it block A, that includes it and also points back directly to our current head of the chain. Because block A extends the previous longest chain we saw, it becomes the new head of the longest chain in our local view, and so we choose to accept the transaction.

The problem is that the protocol runs asynchronously: miners only have their own picture of the chain to build on, so a different miner may produce a competing block B at the same height before hearing about block A, and then propagate B through the network as well. Once we’ve seen both A and B, we no longer have an unambiguous longest chain, and we have to wait for the tie to be broken before the head can be re-established.

If the next block we see points back to B instead of A, the new longest chain will no longer contain A, meaning it was effectively reverted in our view. This phenomenon of block reorgs is to be expected since the Bitcoin protocol is fundamentally designed around not a linearly progressing chain of valid blocks but a tree of them, which is disambiguated by a fork choice rule. To be more literal, a software implementation of the protocol like Bitcoin Core, whose job is to manage your local view of the chain, will happily revert a block sequence of any length, right up to genesis, if the right conditions are met (i.e., if it sees a longer fork than the one it’s on right now) [1].
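To make the reorg scenario above concrete, here is a minimal Python sketch of a single client’s local view as a block tree with a heaviest-chain fork choice. It is not how Bitcoin Core is implemented: the `Block` and `LocalView` names are hypothetical, every block gets unit work by default (which reduces to the longest chain rule), and ties are broken in favor of the first-seen tip.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Block:
    block_id: str
    parent_id: Optional[str]  # None only for genesis
    work: int = 1             # hypothetical weight; Bitcoin weights blocks by difficulty


class LocalView:
    """One client's view of the block tree (illustrative sketch, not Bitcoin Core)."""

    def __init__(self, genesis: Block) -> None:
        self.blocks: Dict[str, Block] = {genesis.block_id: genesis}
        # Cumulative work from genesis up to and including each block.
        self.total_work: Dict[str, int] = {genesis.block_id: genesis.work}

    def add_block(self, block: Block) -> None:
        if block.parent_id not in self.blocks:
            raise ValueError(f"unknown parent {block.parent_id!r}")
        self.blocks[block.block_id] = block
        self.total_work[block.block_id] = self.total_work[block.parent_id] + block.work

    def head(self) -> str:
        # Fork choice: the block with the most cumulative work. With positive work per
        # block this is always a tip; dicts keep insertion order, so ties go to the first seen.
        best = max(self.total_work.values())
        return next(bid for bid, w in self.total_work.items() if w == best)

    def canonical_chain(self) -> List[str]:
        # Follow parent pointers from the current head back to genesis.
        chain: List[str] = []
        cur: Optional[str] = self.head()
        while cur is not None:
            chain.append(cur)
            cur = self.blocks[cur].parent_id
        return chain[::-1]


if __name__ == "__main__":
    view = LocalView(Block("G", None))
    view.add_block(Block("P", "G"))
    view.add_block(Block("A", "P"))  # A contains our transaction
    view.add_block(Block("B", "P"))  # competing block at the same height
    print(view.head())               # still "A": a tie, and A was seen first
    view.add_block(Block("C", "B"))  # the next block builds on B
    print(view.canonical_chain())    # ['G', 'P', 'B', 'C']: A has been reorged out
```

Nothing in `LocalView` refuses a reorg because a block is "deep": any heavier competing branch displaces however many blocks it needs to, which is why a confirmation rule has to be layered on top of the fork choice rather than built into it.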

The key to security, of course, is the economic paradigm imposed by Proof of Work—there’s a material cost built into the block production mechanism, so ordinary miners are incentivized to build on the longest chain since they will only be rewarded for their expenditure if their mined blocks are included in the winning fork. This means that honest participants will eventually come to a consolidated view of the chain history (under the key assumption that they collectively own a majority of the hashpower), which is where the need for multiple block confirmations comes in.

From an adversarial standpoint, PoW is also what disincentivizes attackers from trying to effect large reorgs for the purpose of a double spend—producing the required fork would require competing with the combined honest hash power of the chain, and the cost of doing so scales with the depth of the attempted reorg. We won’t get into the full game-theoretical considerations of the system here—the key idea to take away is that the type of block finality offered by protocols like Bitcoin is an emergent property of the protocol and the incentives designed around it. It isn’t guaranteed by any kind of physical law, but rather by whether those incentives are robust enough to function in an adversarial environment.

So the protocol will tell you the longest chain that exists in your local view (based on the information it receives from the rest of the network). Still, it’s up to you to interpret this result and apply some confirmation rule (e.g., accept blocks with more than N confirmations) to decide what to consider fully confirmed or “final.” There’s no special property that a block with six confirmations possesses compared to one with five or fewer; six is just a point on a discrete tradeoff between wait time and security (likelihood of a reversion) that’s good enough for most practical use cases [2].
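For intuition about how that tradeoff scales, the sketch below computes the probability that an attacker holding a fraction `q` of the hashpower ever overtakes a block buried under `z` confirmations. It follows the calculation in Section 11 of the Bitcoin whitepaper (a Poisson estimate of the attacker’s progress combined with a gambler’s-ruin catch-up probability), which is a slightly different model from the binomial one mentioned in footnote [2]. `confirmations_needed` is a hypothetical helper that turns a risk tolerance into a confirmation rule.

```python
import math


def attacker_success_probability(q: float, z: int) -> float:
    """Chance an attacker with hashpower share q ever catches up from z blocks behind.

    Mirrors the calculation in Section 11 of the Bitcoin whitepaper.
    """
    if not 0.0 <= q <= 1.0 or z < 0:
        raise ValueError("q must be in [0, 1] and z must be non-negative")
    if q >= 0.5:
        return 1.0  # a majority attacker eventually overtakes the honest chain
    if z == 0:
        return 1.0  # the whitepaper's formula gives probability 1 with no confirmations
    if q == 0.0:
        return 0.0
    p = 1.0 - q
    lam = z * (q / p)  # expected attacker blocks while honest miners find z blocks
    total = 1.0
    for k in range(z + 1):
        # Poisson probability that the attacker has found exactly k blocks,
        # computed in log space so that large z does not overflow.
        poisson = math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))
        # From z - k blocks behind, the attacker catches up with probability (q/p)^(z-k).
        total -= poisson * (1.0 - (q / p) ** (z - k))
    return total


def confirmations_needed(q: float, max_risk: float, limit: int = 10_000) -> int:
    """Smallest z whose modeled reversion probability is below max_risk (hypothetical helper)."""
    for z in range(limit + 1):
        if attacker_success_probability(q, z) < max_risk:
            return z
    raise ValueError("risk target not reachable within limit")


if __name__ == "__main__":
    for z in (1, 2, 6):
        print(z, round(attacker_success_probability(0.1, z), 7))
    print(confirmations_needed(0.1, 0.001))  # 5, matching the whitepaper's table
```

Under this model the risk shrinks by a roughly constant factor with each additional confirmation but never reaches zero, which is exactly the wait-time-versus-security tradeoff described above.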

Is Finality Really Final?

Stepping back for a bit, if we want to try to pose a more general definition of finality, it should necessarily be quite broad and taken with a grain of salt since we know the term does a lot of work abstracting over a heterogeneous set of assumptions and mechanisms. Given what we’ve discussed so far, maybe we can say something like this: A finalized block, under a given protocol, is one that will never be reverted or will be reverted with some negligible probability, in the local view of anyone adhering to the protocol, under some kind of assumption about the behavior of network participants (e.g., some proportion of network resources are honest).

We’ve already discussed how we need to be careful in our reasoning about those assumptions—they aren’t just a given, and so we should consider both how economic mechanisms like PoW secure them and how the network will behave if they are broken. Another fundamental caveat to this definition is that it’s only meaningful given a fixed set of protocol rules and, more generally, a continued agreement among network participants on the progression of the chain, apart from what is decided by in-protocol consensus.

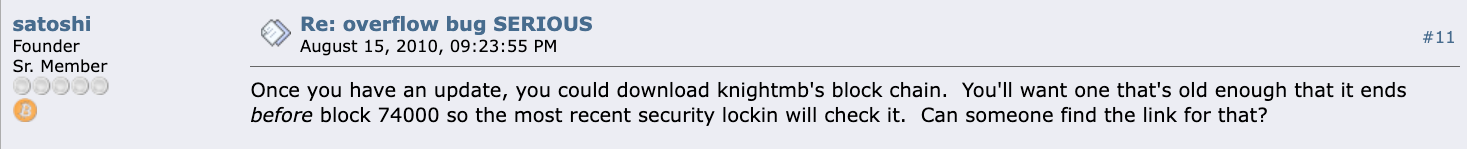

In 2010 (the early days of Bitcoin), an attacker exploited an overflow bug to credit themselves with transaction outputs that exceeded the value of their inputs. By the time someone noticed the transaction, it was already over a dozen blocks deep, and anyone following the chain with their own node would have considered it valid if they had simply accepted the output of the protocol. The overflow bug itself was fixed by a simple update to the client code; however, the attacker’s UTXOs would have remained in the state if miners had done nothing else. Instead, those running full nodes literally deleted the blocks that had been built on top of the attack transaction in their local databases and started rebuilding the chain from there. Blocks that would have been considered finalized under a rule like “greater than six block confirmations” were reverted, but this wasn’t because Bitcoin’s security was compromised. Rather, the community observing the chain and manually examining the state updates being produced decided that those updates didn’t match how they wanted the protocol to behave and agreed on a new chain running a revised version of the protocol.

A similar pattern played out in response to the DAO hack on pre-merge Ethereum. Because the attacker’s transactions were already final, it was difficult to reach a solution within the limits of the protocol, and a hard fork was eventually the only remedy able to mitigate the problem. Rather than rewinding the chain block by block, that fork applied an irregular state change that moved the drained funds into a refund contract, effectively reverting the outcome of the hack. Note that these reversions had nothing to do with the finality of these chains being probabilistic or “soft”; instead, the participants in the network collectively and subjectively decided to treat certain transactions as invalid because, even though they adhered to the protocol, they broke higher-level shared assumptions about how the chain should function.

Another protocol we’ll explore later in the series is Tendermint, which offers a property sometimes referred to as “hard” finality: once a block is committed, it won’t be reverted, provided the protocol’s assumptions hold (in particular, that less than one third of the voting power is Byzantine). Cosmos was the first chain to use Tendermint for consensus, and although it has never seen a reverting hard fork, its community also acknowledges the importance of keeping state-reverting hard forks available as an option in response to a major attack on the network:

“…the technology underlying the Cosmos Hub was intentionally developed to enable low-friction forks and rollbacks. We’ve seen the community practice these techniques numerous times on the test networks. It’s likely they will need to be used on a mainnet as well.” [source]

The fact that a Tendermint chain (which gives hard finality) was designed to easily facilitate rollbacks (which revert finality) isn’t a contradiction, since these two mechanisms operate on different levels. Finality, whatever form it comes in, is an in-protocol mechanism, while rollbacks are an out-of-protocol, socially determined one. We’ll come back to Tendermint, how it provides “hard” finality, and what that term means more concretely in another post. For now, we simply want to reiterate that this caveat does not arise from any property specific to Nakamoto protocols. Rather, it applies universally to all protocols since, fundamentally, hard forks are an out-of-protocol measure.

Here, we’ve reached the limits of what finality, however it may be constructed, can guarantee. It offers protection against attacks or local inconsistencies related to protocol consensus that might threaten a block reversion, but it does not (and cannot) guarantee anything about social consensus, which is the ultimate determinant of the canonical state. Miners or validators can always step outside of the protocol itself, start up a new, distinct chain with revised state or protocol rules, and agree to switch their resources to that one. The hope here, of course, is that this can’t be achieved trivially—here lies the root of the importance of decentralization, which acts as a check against the ability of a single party to arbitrarily modify the protocol or state, and therefore bolsters (or weakens, when it’s lacking) the legitimacy of the chain itself. In the end, while this process is inherently subjective, once there is a collective agreement by the committed network participants to switch to a hard fork, there is an objective reason for users to accept that new chain as canonical, since it will retain the resource commitments (e.g. hash power or stake weight) that ensure its security [3].

Where does this leave us? In addition to being dependent on a set of core assumptions, finality only has meaning given fixed social consensus on protocol rules/state. A more relevant definition for a finalized block, then, is one that can be invalidated only by a state-reverting hard fork, which we've seen can be a part of the lifetime of a major blockchain.

As an ordinary user, once your transaction is finalized on any chain, you can remain very confident it will stay that way, as long as there isn’t a massive attack sitting in the same block or before it, and the network is sufficiently decentralized. Ultimately, though, a finalized transaction is not something you can immediately bet your life on, due to the possibility of a state-reverting hard fork accepted by social consensus superseding the previous canonical chain.

All or Nothing?

Deep consideration of consensus models is important not only for understanding critical cases like rollbacks (we’ll return to this in the next section) but also for informing the design of a bridge more generally. Speaking loosely, let’s talk about a bridge in the general sense as a protocol that produces attestations to the state of a source chain, which can be verified in the execution environment of a destination chain. The importance of finality is immediately obvious since, intuitively, it’s only safe for a bridge to produce an attestation to state once it knows that it’s achieved an appropriate level of block safety and can confidently be considered canonical (i.e., won’t be reverted).

Going off of this casual definition, we can identify two major pieces essential for any general-purpose bridge. One of them is what we might consider the “backend” of the protocol—the core architecture which hooks into each of the chains it connects and verifies the state of consensus. The “frontend” has to do with the consumable attestations to specific chain state that a bridge then produces after processing consensus—their format and the kind of information they tell you about the chain, and any higher-level abstractions built on top of them, which in turn determine what use-cases are possible and how developers integrate them into cross-chain apps. The set of assumptions we make about consensus generates the design space in which both these aspects exist and intertwine. To explore this, let’s consider a strawman model, where we make the simple assumption that a consensus protocol produces a chain where the only measure of block safety is whether a block is “finalized” according to some boolean condition, and a bridge attests to a piece of state only when it satisfies that condition.

On the frontend side, thinking of finality as a binary condition that must hold for a bridge to confirm a transaction would be a nice mental model and would certainly make for a friendly interface, but there are some clear pitfalls to adopting it as a universal standard. For one, this abstraction doesn’t map cleanly to all protocols. As we saw for Bitcoin, finality doesn’t actually have a well-specified, unambiguous meaning; it’s really up to each individual to decide according to their own preferences for latency versus risk. Designing around this assumption therefore means embedding that subjective decision somewhere in the core protocol, where it is forced onto all downstream users.

The flip side is that users also lose out on useful information about block safety that some chains provide natively but that falls outside of a binary categorization. For a Bitcoin transaction, for example, knowing the number of block confirmations is strictly more expressive than being told whether it’s “finalized” or not, since finality in this context is defined as a function that maps that number onto a reduced range (true or false). Why does this kind of information matter? A token bridge supporting asset transfers should require a very high level of block safety before it confirms transactions, something like a high number of block confirmations or full PBFT finality. On the other hand, for an application like cross-chain e-mail, there might not be as high a cost associated with a transaction getting reverted, making it safe to confirm transactions after a single block confirmation or according to some pre-finality safety condition. The same reasoning can apply in other cases where the messages being bridged don’t imply a direct transfer of value; oracle updates, in some cases, are another good example.
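As a minimal illustration of why the count is more useful than the boolean, the Python sketch below carries a confirmation count in a hypothetical attestation type and lets each application apply its own threshold. The `Attestation` type, the application names, and the thresholds are all assumptions chosen for the example, not values from any real bridge.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Attestation:
    """Hypothetical bridge message carrying granular block-safety information."""
    source_chain: str
    tx_hash: str
    confirmations: int  # 1 = included in the current head block


def is_final(att: Attestation, threshold: int = 6) -> bool:
    # The strawman interface: the bridge fixes one threshold for every consumer.
    return att.confirmations >= threshold


# Hypothetical per-application policies: each consumer picks its own latency/risk tradeoff.
MIN_CONFIRMATIONS: Dict[str, int] = {
    "token_bridge": 30,      # value transfer: wait well past the default
    "cross_chain_email": 1,  # a reverted message is cheap: accept at the head
}


def app_accepts(app: str, att: Attestation) -> bool:
    return att.confirmations >= MIN_CONFIRMATIONS[app]


if __name__ == "__main__":
    fresh = Attestation("bitcoin", "tx-1", confirmations=1)
    deep = Attestation("bitcoin", "tx-2", confirmations=40)
    # The boolean view collapses every count into a single bit.
    print(is_final(fresh), is_final(deep))  # False True
    print(app_accepts("cross_chain_email", fresh), app_accepts("token_bridge", fresh))  # True False
```

The bridge never has to decide what "final" means here; it only reports what it observed, and the policy table lives with the consumer.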

Another interesting idea made possible by this kind of information is creating mechanisms that match users to a third party willing to complete their bridge transactions ahead of finality. While a token bridge itself shouldn’t be designed to disburse bridged assets until finality is reached, you could construct a protocol on top of it where withdrawals are treated as tokenized debt that a user can sell to a liquidity provider, which from the user’s perspective looks like an early withdrawal. In this way, the reversion risk is assumed by the LP (which is compensated by a fee paid by the user), while the bridge itself takes on no additional risk and requires no additional trust assumptions.
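A rough sketch of the economics, assuming the LP loses the whole amount if the source transaction is reverted and otherwise receives the full withdrawal once the bridge settles it: the LP can price the claim at its expected value minus a margin. `WithdrawalClaim`, `lp_quote`, `sell_claim`, and `settle` are hypothetical names, and the flat margin is an illustrative assumption rather than a real pricing model.

```python
from dataclasses import dataclass


@dataclass
class WithdrawalClaim:
    """Hypothetical tokenized claim on a bridge withdrawal that is not yet final."""
    amount: float
    owner: str
    settled: bool = False


def lp_quote(amount: float, reversion_prob: float, margin: float = 0.002) -> float:
    """Upfront payout an LP might offer: expected value of the claim minus a fee margin."""
    if not 0.0 <= reversion_prob <= 1.0:
        raise ValueError("reversion_prob must be a probability")
    return amount * (1.0 - reversion_prob) * (1.0 - margin)


def sell_claim(claim: WithdrawalClaim, lp: str, reversion_prob: float) -> float:
    # The user is paid now; the LP takes ownership of the claim and its reversion risk.
    payout = lp_quote(claim.amount, reversion_prob)
    claim.owner = lp
    return payout


def settle(claim: WithdrawalClaim, source_tx_finalized: bool) -> float:
    # The bridge pays the current owner only once the source transaction is final.
    # A claim whose source transaction is reverted is simply never paid.
    if claim.settled or not source_tx_finalized:
        return 0.0
    claim.settled = True
    return claim.amount


if __name__ == "__main__":
    claim = WithdrawalClaim(amount=1_000.0, owner="alice")
    upfront = sell_claim(claim, "lp", reversion_prob=0.001)
    print(round(upfront, 2), claim.owner)           # alice is paid now; lp holds the claim
    print(settle(claim, source_tx_finalized=True))  # 1000.0, paid to the LP at finality
```

The bridge’s own settlement logic is unchanged: it still waits for finality, and only the party holding the claim changes.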

More generally, rather than insisting on some generalized notion of finality (which will inevitably be a somewhat rickety construction) as a singular metric, a more flexible principle is to try to give more granular information about the confirmation status of a transaction and allow the protocols actually using those messages to decide how to interpret that information, and what tradeoff to make between reversion risk and latency [4]. Having access to more meaningful information about block safety and the correspondingly novel possibilities for application design that emerge from these more nuanced models make it worth thoughtfully considering these kinds of tradeoffs, rather than labeling a potentially arbitrary point in each protocol as “finality” and calling it a day.

Bringing our focus to the backend, there is no immediately obvious way to build an interface that can plug into arbitrary consensus models, and the more chains we add to the mix, the messier the problem gets. Tendermint consensus is based on voting in rounds under partial synchrony, resolving view fragmentation in the process of block production itself. Some protocols, like Ethereum, adopt a hybrid model with both a fork choice rule like Bitcoin’s (LMD GHOST) and a round-based, PBFT-like mechanism like Tendermint’s (Casper FFG), so the chain can progress asynchronously while still eventually achieving “hard” finality. Other examples of this are Near (Doomslug/Nightshade) and Polkadot (BABE/GRANDPA).
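To see why a single backend interface is awkward, consider a hypothetical adapter layer that maps each chain’s native status onto one shared scale. The sketch is illustrative only: the `Safety` levels and the Bitcoin thresholds are assumptions, the Ethereum adapter assumes the caller has already fetched the block numbers behind the JSON-RPC `safe` and `finalized` block tags (and that the block is on the canonical chain), and the Tendermint adapter assumes the caller knows whether the block was committed.

```python
from enum import IntEnum


class Safety(IntEnum):
    """Hypothetical common block-safety scale a bridge backend might expose."""
    UNSAFE = 0     # seen, but could still be reorged under normal operation
    SAFE = 1       # unlikely to be reorged under normal operation
    FINALIZED = 2  # in-protocol finality (still subject to social-consensus hard forks)


def bitcoin_safety(confirmations: int, safe_at: int = 2, final_at: int = 6) -> Safety:
    # No in-protocol finality: the adapter has to embed subjective thresholds.
    if confirmations >= final_at:
        return Safety.FINALIZED
    if confirmations >= safe_at:
        return Safety.SAFE
    return Safety.UNSAFE


def ethereum_safety(block_number: int, safe_head: int, finalized_head: int) -> Safety:
    # Hybrid model: LMD GHOST picks the head, Casper FFG finalizes checkpoints behind it.
    # Assumes block_number refers to a block on the current canonical chain.
    if block_number <= finalized_head:
        return Safety.FINALIZED
    if block_number <= safe_head:
        return Safety.SAFE
    return Safety.UNSAFE


def tendermint_safety(committed: bool) -> Safety:
    # A committed block is final immediately, assuming < 1/3 Byzantine voting power.
    return Safety.FINALIZED if committed else Safety.UNSAFE
```

Note how only the Bitcoin adapter has to invent `safe_at` and `final_at`: the same subjective choice discussed for the frontend reappears here, buried one layer deeper.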

Layer 2 protocols are another interesting case study. Optimistic rollups, for example, offer progressively stronger assurances of safety through sequencer receipts, L1 block posting (and finalization), and ultimately the passage of a fraud-proof period, while on zk rollups, blocks are final as soon as their execution proofs are verified on the L1 and finalized there. Solana’s TowerBFT, Avalanche’s Snowball, and DAG-based BFT constructions like Tusk and Bullshark are yet more incongruous models, among others, each with its own approach to finality to understand and consider. We’ll dig deeper into some of these in later posts; for now, our purpose is simply to highlight the breadth of complexity that must be dealt with if we want a solution that covers all of them.
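The progressive assurances of an optimistic rollup can be sketched as an ordered set of stages, next to the simpler condition for a zk rollup. The stage names, inputs, and seven-day default challenge window are assumptions for illustration (windows of about a week are common, but each rollup sets its own), and the sketch assumes no fraud proof has succeeded against the state claim.

```python
from enum import IntEnum
from typing import Optional

CHALLENGE_PERIOD_S = 7 * 24 * 60 * 60  # assumption: about one week; rollup-specific in practice


class OptimisticStage(IntEnum):
    SEQUENCER_RECEIPT = 0        # only the sequencer's promise
    POSTED_TO_L1 = 1             # batch data included in an L1 block
    L1_FINALIZED = 2             # that L1 block has reached L1 finality
    CHALLENGE_PERIOD_PASSED = 3  # state claim survived the fraud-proof window


def optimistic_stage(
    batch_on_l1: bool,
    batch_l1_finalized: bool,
    state_claim_age_s: Optional[float] = None,
    challenge_period_s: float = CHALLENGE_PERIOD_S,
) -> OptimisticStage:
    """Classify an optimistic rollup transaction's assurance level (hypothetical helper)."""
    if not batch_on_l1:
        return OptimisticStage.SEQUENCER_RECEIPT
    if not batch_l1_finalized:
        return OptimisticStage.POSTED_TO_L1
    # Assumes no successful fraud proof has been submitted against the state claim.
    if state_claim_age_s is not None and state_claim_age_s >= challenge_period_s:
        return OptimisticStage.CHALLENGE_PERIOD_PASSED
    return OptimisticStage.L1_FINALIZED


def zk_rollup_final(proof_verified_on_l1: bool, proof_block_l1_finalized: bool) -> bool:
    # A zk rollup block is final once its validity proof is verified in a finalized L1 block.
    return proof_verified_on_l1 and proof_block_l1_finalized
```

Because the stages are ordered, a consumer can state its requirement as "at least `L1_FINALIZED`" rather than a yes/no question.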

Another dimension to consider is where you’re actually running the computation to track consensus and how this is verified on-chain. We’ll save that discussion for later as well, but it leads to the consideration of different solutions like validator/multi-sig, light client, and optimistic bridges. For now, we simply want to acknowledge a tension. On the one hand, the heterogeneity of these consensus models calls for an expressive model of block safety, so that we can effectively integrate many different chains. On the other, there is the desire for a unified, approachable API that allows developers to build cross-chain applications without requiring deep domain knowledge of these different models. Designing effectively along this tradeoff requires a thorough grasp of the mechanics of each individual chain.

The Bridging Dilemma

Earlier, we discussed how the state of a chain is ultimately subject to social consensus, which means that a transaction is only really canonical if the participants in the network collectively agree it’s valid, outside of what the protocol itself outputs. While we don’t have to worry about ordinary blocks being reverted arbitrarily after finality (if we believe the network is sufficiently decentralized), it’s important to keep in mind that rollbacks are still a back-pocket option that a network may take if a major attack puts the chain into crisis.

The potential for rollbacks (when thinking about bridges) produces a difficult edge case, since a well-defined notion of a bridge is inherently dependent on the consistent state of the source chain. Once a transaction has been “bridged”, which means that an attestation to the transaction on the source chain has been produced, and a transaction that verifies that attestation succeeds, that successful verification will be written to and finalized on the destination chain, regardless of whether the original transaction has been reverted by a hard fork on the source chain.

If a source chain transaction does end up getting reverted by a hard fork, the destination chain will retain a view that’s inconsistent with the source chain. There’s no simple way to resolve this since the bridge attestation, as well as any subsequent state changes that result from it, are subject to the destination chain’s finality rather than the source chain’s. As Vitalik Buterin has explained using an example with a double spend, any kind of attack, even at the consensus level, can be fixed by a rollback if the participants in the network agree it’s necessary, but rolling back a bridged transaction would require reverting both the chain it originated from and the destination chain (to invalidate the corresponding attestation), which is a far harder coordination problem.

This point is critical to acknowledge when considering the limits of the guarantees a bridge can offer. Beyond the inherently limited guarantees of finality we’ve seen, the security of a bridge also depends heavily on the actual implementation of its core protocol and smart contracts, where vulnerabilities at any level of the stack pose a potential threat to the protocol as a whole. However, we contend that this is not a reason to give up on bridging altogether, or to conclude that safe bridging is impossible. The promising potential for composable cross-chain utility that bridges offer means that many parties are already building solutions and designing in response to these hard problems, and will continue to do so, potentially alongside new builders, as the space continues to grow.

Practically speaking, then, the future isn’t divided between a world with bridges and a world without them; rather, the distinction is between worlds whose bridges are rigorously designed for safety and worlds whose bridges are not. The goal to strive for is for bridging protocols to develop an arsenal of safety mechanisms that specifically target and mitigate different risks. There are effective solutions that, while sometimes making a necessary tradeoff in UX, can address major attack vectors. A token bridge, for example, can rate-limit transfers in proportion to the value of the resources securing the source chain, which reduces the potential impact of a bridged double spend attack from virtually uncapped to a bounded, manageable value that doesn’t threaten economic contagion. For implementation-level security, meaningful bug bounties and external audits (along with high-quality development practices and a red-team mindset) are a baseline must-have for serious protocols.
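One way such a limit might look, as a minimal sketch: a sliding-window cap on released value, set to some fraction of an estimate of the value securing the source chain. `SecurityScaledRateLimiter`, the `fraction` parameter, and the idea of passing in a single `security_value` figure are all assumptions; how to estimate that value (hashpower cost, stake, and so on) is left open here.

```python
from collections import deque
from typing import Deque, Tuple


class SecurityScaledRateLimiter:
    """Hypothetical sliding-window limit on value released by a token bridge."""

    def __init__(self, security_value: float, fraction: float, window_s: float) -> None:
        # Maximum value released per window: a fraction of what an attacker would
        # have to spend or put at risk to attack the source chain.
        self.cap = security_value * fraction
        self.window_s = window_s
        self._released: Deque[Tuple[float, float]] = deque()  # (timestamp, amount)
        self._in_window = 0.0

    def _evict(self, now: float) -> None:
        # Drop transfers that have aged out of the window.
        while self._released and self._released[0][0] <= now - self.window_s:
            _, amount = self._released.popleft()
            self._in_window -= amount

    def try_release(self, amount: float, now: float) -> bool:
        """Release the transfer if it fits under the cap; otherwise leave it for later."""
        self._evict(now)
        if self._in_window + amount > self.cap:
            return False
        self._released.append((now, amount))
        self._in_window += amount
        return True


if __name__ == "__main__":
    limiter = SecurityScaledRateLimiter(security_value=1_000_000.0, fraction=0.01, window_s=3600)
    print(limiter.try_release(6_000.0, now=0))      # True
    print(limiter.try_release(5_000.0, now=10))     # False: would exceed the 10,000 cap
    print(limiter.try_release(5_000.0, now=3_601))  # True: the first transfer aged out
```

Rejected transfers would more plausibly be queued than dropped, so honest users see a delay while a bridged double spend can drain at most `cap` per window before anyone has to react.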

We’ve also discussed how blockchains are not just technical protocols but also networks of participants running those protocols, with an inherent subjective dependency on social consensus. A bridge, then, cannot consist solely of its core technical architecture; decentralized governance to handle potential non-determinism (such as a state-reverting hard fork on a connected chain) is another essential component. Bridges, like building on-chain more generally, are an inherently risky business, but the hope is that the teams building them will rise to the challenge, effectively addressing threats across the board with in-depth countermeasures.

Conclusion

In this post, we discussed Bitcoin in order to explore one particular example of how a decentralized protocol achieves eventual consistency, producing the “finality” that we depend on in order to safely transact. We then backed out to a more general description of finality, which sets the stage for a discussion of how other protocols use different underlying mechanisms to achieve this same high-level property, and of how this informs the design space that generic bridging protocols grapple with (with a brief digression on security). In the series to come, we’ll continue with this topic, exploring consensus protocols used by other chains and how we can adjust our general model of finality to account for them. Stay tuned.

Huge thanks to Ben Huan, Nihar Shah, Rahul Maganti, Chase Moran, and others for the feedback.

[1] The actual fork choice rule used in Bitcoin selects the chain with the most cumulative work (often described as the highest total difficulty), which is just a more sophisticated version of the longest chain rule where each block is weighted by its difficulty.

[2] To be a bit more formal: if we model block production as a binomial process with an attacker holding some fixed proportion of hashpower, we can show that the attacker’s odds of successfully reorging a given block drop off exponentially as the chain progresses. So, given an honest majority of hashpower, your confidence in a block can’t be exactly 100%, but it can approach it asymptotically as you see more confirmations. The more meaningful criticism of Proof of Work’s security isn’t that probabilistic certainty isn’t good enough (an asymptotically small distance from certainty, if we’re being Bayesian about things, is arguably a good definition of certainty itself). Rather, it’s that the resources required for block production are uncorrelated with the asset being secured by the blockchain itself, along with other undesirable properties such as attacks being repeatable upon success and difficult to attribute.

[3] It’s not always an all-or-nothing allocation, of course, as in cases like BTC/BCH and ETH/ETC. In those cases, the two forks both continue with their respective shares of network resources, but a fork with only a minority share of resources can suffer from reduced security.

[4] Providing full granularity is, of course, too drastic as well—for Ethereum, this would imply providing a view of the latest attestations of all 400,000 or so validators. At that point, your bridge wouldn’t actually be doing much, requiring integrators to do the full work of implementing consensus logic.